I’ve been working on our imaging process and one thing has always bothered me. I use a modified version of this script from TheITBros that removes the Windows Media Player icon from the taskbar for users who log in.

Normally, the way to implement this is to place it inside of the Startup folder in Administrator’s profile during audit mode and then sysprep with copyprofile.

However, the imaging system I’m working on is slightly different because we build images for multiple companies. I have my autounattend inserted directly into the Windows ISO so that I can instruct the person building the image to “install a PC with this ISO and then customize from there, running sysprep when you’re done.” That way, I know that the image being built is at least starting the right way.

There are several files that I want in all of the installs that happen this way and, for the most part, I’m able to mount the WIM file, insert them, and commit the changes.
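For reference, mounting looks roughly like the sketch below. The paths match the ones used in the other commands in this post, and I’m assuming install.wim sits in the usual sources folder of the unpacked ISO; the index number is an assumption, so list the images first and pick the edition you actually install.

```batch
rem Run from an elevated cmd.exe.
rem List the images inside the WIM; the /Index value below is an assumption.
dism /Get-WimInfo /WimFile:D:\matt\Downloads\ISOs\Windows7x64\sources\install.wim

rem The mount directory must exist and be empty before mounting.
if not exist D:\Mount md D:\Mount

rem Mount the chosen image so its file system shows up under D:\Mount.
dism /Mount-Wim /WimFile:D:\matt\Downloads\ISOs\Windows7x64\sources\install.wim /Index:1 /MountDir:D:\Mount
```

Once mounted, files can be copied into D:\Mount with Explorer or copy like any other folder.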

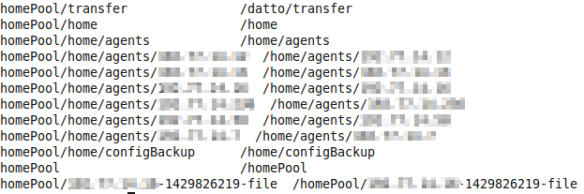

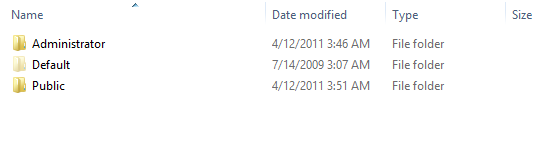

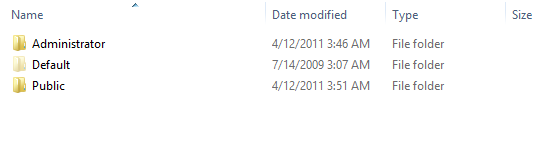

However, if I browse to C:\Users in the default install.wim, I see the following:

I had a few thoughts here:

- When I’m logged in, Administrator’s profile becomes the Default profile, so what will happen if I place the file in Default?

- The Default profile doesn’t actually have a Startup folder in the Start Menu (Administrator does)

- The script deletes itself after it runs. Is that going to break copyprofile?

Obviously, this is going to require some testing. To see what will happen, I’m going to forget about the script for a moment (remember the self-delete) and place a file called default.txt in the Startup folder for Default and a file called Administrator.txt for the profile called Administrator. For Default, I created the Startup folder manually since it doesn’t exist in the default WIM.

Side Note: UAC didn’t prompt me when copying to the Administrator profile, but did when copying to Default. That’s a good topic for another post.

Next, I’ll commit the WIM and image a PC with it to see what happens. In case you’re not familiar with dism, here’s the commit command (Note, dism needs to be run from an elevated cmd.exe):

dism /Unmount-Wim /Mountdir:D:\Mount /Commit

That trailing commit is a question on every certification exam ever.

Next, I’ll create an ISO from the unpacked Windows image with oscdimg. I had to look this up. As always, the TechNet page is fully detailed and so verbose that I wasn’t sure what to do.

Here’s the command to create the ISO:

oscdimg -n -m -bD:\matt\downloads\ISOs\Windows7x64\boot\etfsboot.com D:\matt\Downloads\ISOs\Windows7x64 d:\Win7.iso

Based on that TechNet article, I suspect there are other ways to do this, but this has worked correctly for me.

With the ISO created, I created a test VM, assigned the image to the DVD drive, and let Windows install (thankfully, this requires no user input, so I went to get a drink during the process). This is the really fun part of working with imaging: waiting for Windows to install 100 times over. Usually, I can use VM snapshots to help with this kind of testing, but they do me no good for testing automated installs.

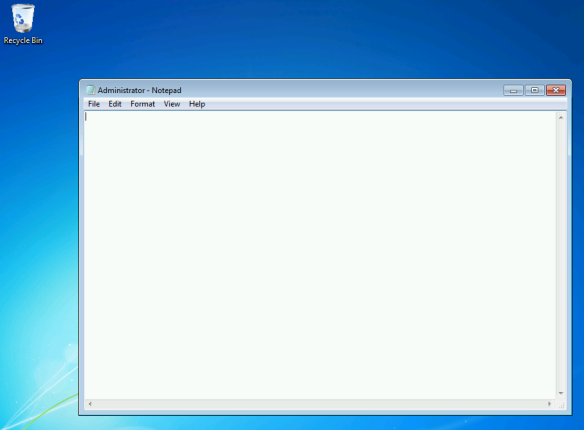

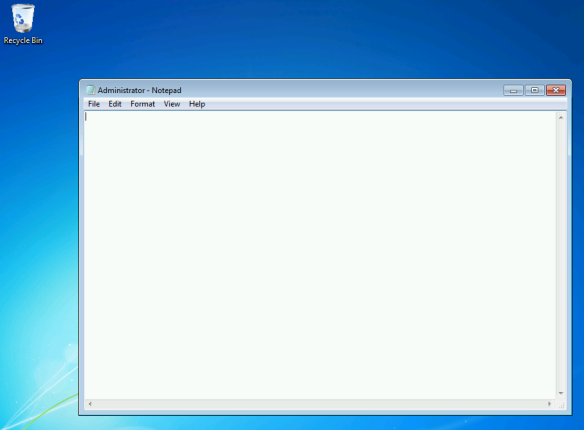

Once the image install finished, I found the following:

So this tells me three things:

- The folder named Administrator will be the default folder in Audit mode.

- Anything placed in Startup within the WIM will be run on first boot (this is before sysprep)

- If I put the vbscript in there, it would probably delete itself.

The reason I say probably here is because at this time, I’m not sure whether this is the default profile or a copy of it. To find out, I checked out the Default folder in Windows Explorer:

What happened to default.txt remains a mystery – it’s completely gone. Just to make sure that I wasn’t looking at an NTFS junction or similar, I saved some text to the copy of this in the Default folder and verified that it did not reflect in the other location. It didn’t.

Now that I know where this file needs to be placed, the next step is to figure out how to get it there without it executing and deleting itself. The difficulty here is illustrated best in the help file that comes with WSIM about the copyprofile option:

CopyProfile runs during the specialize configuration pass and should be used after you make customizations to the built-in administrator profile.

The issue is that my script is going to disappear when it runs and so even though it will be in audit mode’s default profile, sysprep is going to replace that whole profile with (simplifying) the contents of C:\Users\Administrator which won’t have the script in it.

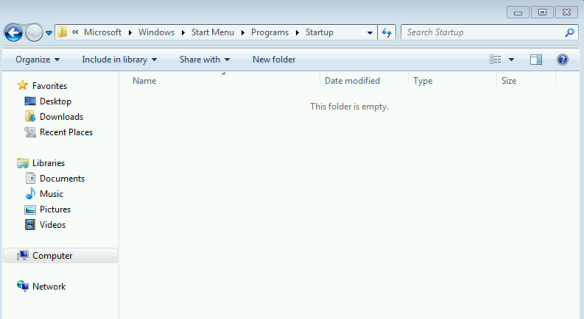

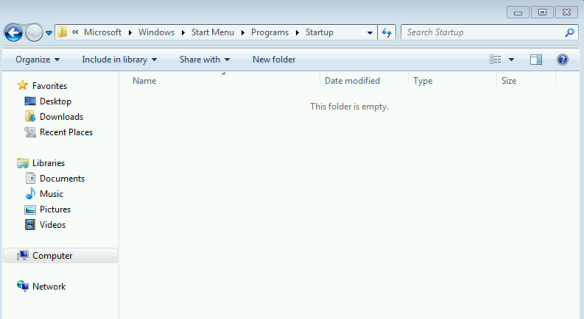

Let’s verify this. I shut down the imaging PC, remounted the WIM, and replaced the text files I had placed earlier with the taskbar script (also removing the extra Startup folder I created). After committing this image, creating a new ISO, and letting Windows setup run, here’s what we have:

As expected, an empty startup folder. Not shown in this screenshot is that the script did rearrange the taskbar as I had wanted it to.

Finally, back to the normal world of snapshots helping with testing. I’m going to snapshot here before running sysprep as I test how to actually make this behave the way I want it to. As the help for the copyprofile option mentions, I have a separate XML file to pass to sysprep, distinct from the one used to install Windows. In the WIM, I keep that in the sysprep folder so that someone following the process just has to run one command. In this case, I’ll modify it on my host and replace it.
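That one command would look something like the sketch below. The switches are standard sysprep options, but the answer file name (unattend.xml) and the choice of /shutdown are assumptions for illustration; CopyProfile only takes effect when /generalize is used.

```batch
rem Run from an elevated cmd.exe in audit mode.
rem unattend.xml is a placeholder name for the sysprep answer file kept in the sysprep folder.
%WINDIR%\System32\sysprep\sysprep.exe /generalize /oobe /shutdown /unattend:%WINDIR%\System32\sysprep\unattend.xml
```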

I think the solution here is to put my vbs file right on C: and then move it into the default profile during the specialize phase, but I’m not sure whether at that point I need to move it to Administrator’s profile or to Default. I copied the vbs to C: and added a move command to the XML file to move it to Administrator’s profile during phase 4. Here’s the result:

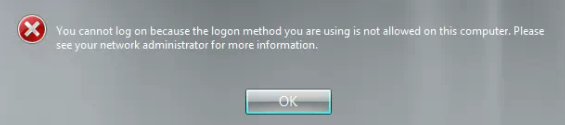

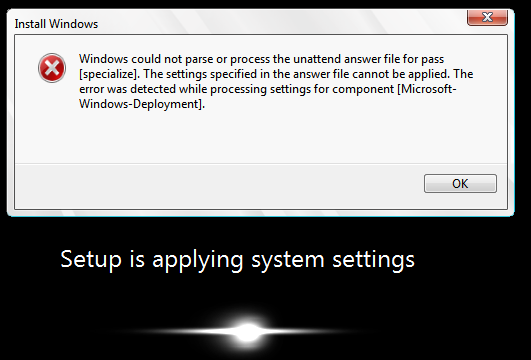

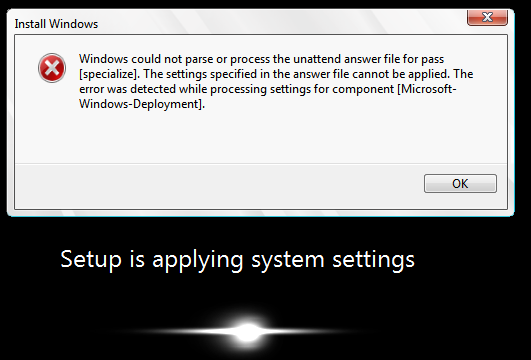

I used a very basic unattend.xml file with essentially only the move command in it. This went through sysprep and came up with the following error:

Given that this is the component and phase where I’m copying the file, it seemed obvious that that was causing the problem. While this message was up, I opened a command prompt and checked the log file. The following error was logged:

Hit an error (hr = 0x80070002) while running [md "C:\Users\Default\AppData\Roaming\Microsoft\Windows\Start Menu\Programs\Startup"]

0x80070002 is “no such file or directory”. In the open command prompt window, I checked and to my surprise, the folder existed. Clearly, I was losing my mind.

As an aside, the log file made it clear that the profile was copied before these commands are executed, which is something I was not sure about.

The first and most obvious check was to double-check the command I have in my answer file. It was correct. Then I noticed that the folder’s time stamp showed a modification time older than the sysprep run. It actually looked like it was from around the time Windows was initially installed on this VM.

If that’s the case, then md (mkdir) is blowing up because the directory already exists. I don’t know of any switches to md that tell it to ignore an existing directory, short of writing a batch file for this, so let’s just remove the directory-creation line from the unattend file.
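For what it’s worth, the batch file approach would be short. This is only a sketch of the guard, using the same folder as the log entry above; the STARTUP variable name is mine. It is batch syntax, so it belongs in a .cmd file rather than directly in the answer file.

```batch
rem Create the Startup folder only when it is missing,
rem so an already existing directory is not treated as an error.
set "STARTUP=C:\Users\Default\AppData\Roaming\Microsoft\Windows\Start Menu\Programs\Startup"
if not exist "%STARTUP%" md "%STARTUP%"
```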

Same error, but on the move command. I did a quick Internet search to see if anyone has seen this problem before and came across this thread. That’s a good point. Is move also a shell builtin? I found lots of articles indicating that md was, but nothing for move. However, a search for the executable turned up nothing, so I thought I’d give this a shot:

cmd /c move C:\SetTaskbar.vbs C:\users\Default\AppData\Roaming\Microsoft\Windows\Start Menu\Programs\Startup

That caused my unattend to run. I had to go through Windows Welcome because there was basically nothing but the move command in the answer file. Next, I checked to see if the vbs copied where I wanted it to.

Nope, nothing there. Also, SetTaskbar.vbs is still sitting in C:. Did this just not run at all? I feel like I’m going through a lot of effort to make this work correctly. Taking a look at the log, cmd returned successfully, but it pretty much always will in this case. Looking at my command above, the destination path isn’t quoted even though it contains a space (in Start Menu). Let me add the quotes and run sysprep again.
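The quoting problem can be reproduced at a normal command prompt without waiting on sysprep. This is a scratch sketch; C:\QuoteTest and demo.vbs are made-up names used only for the demonstration.

```batch
rem Scratch folder with a space in the destination name, plus a dummy file to move.
md "C:\QuoteTest\Start Menu"
echo test> C:\QuoteTest\demo.vbs

rem Unquoted: the destination is split at the space, move sees an extra argument
rem and refuses to run, so the file stays where it was.
cmd /c move C:\QuoteTest\demo.vbs C:\QuoteTest\Start Menu

rem Quoted: the destination is passed as one path and the file is moved.
cmd /c move C:\QuoteTest\demo.vbs "C:\QuoteTest\Start Menu"
```

Checking with dir after each move shows the difference: the file only leaves C:\QuoteTest after the quoted version.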

That worked! The script ran in Administrator’s context when I logged on. Next, let’s see if it actually did end up in the default profile by creating another user and logging on. That worked correctly, too. I did have a prompt before it ran, but that was only because I had downloaded it from Dropbox on the web and there was an alternate data stream.
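If you want to check for that stream before copying a downloaded script into an image, something like the following should show it. I’m assuming the stream is the usual Zone.Identifier one that browsers attach to downloads.

```batch
rem List the file together with any alternate data streams (dir /r exists on Vista and later).
dir /r C:\SetTaskbar.vbs

rem Print the Zone.Identifier stream, if present; it records the zone the file was downloaded from.
more < C:\SetTaskbar.vbs:Zone.Identifier
```

The Unblock button on the file’s Properties dialog removes the stream, which gets rid of the prompt.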

To recap, here’s what I was trying to do and what worked:

- The taskbar script deletes itself when running, so I can’t put it in the default profile in the WIM

- To work around the above, I decided to move it to the default profile from C: during sysprep

- I need to move it into Default rather than Administrator because the move command will run after copyprofile

- move is a shell builtin (as is mkdir) so I need to run the command with cmd.exe /c

- The final command is cmd /c move C:\SetTaskbar.vbs "C:\users\Default\AppData\Roaming\Microsoft\Windows\Start Menu\Programs\Startup"